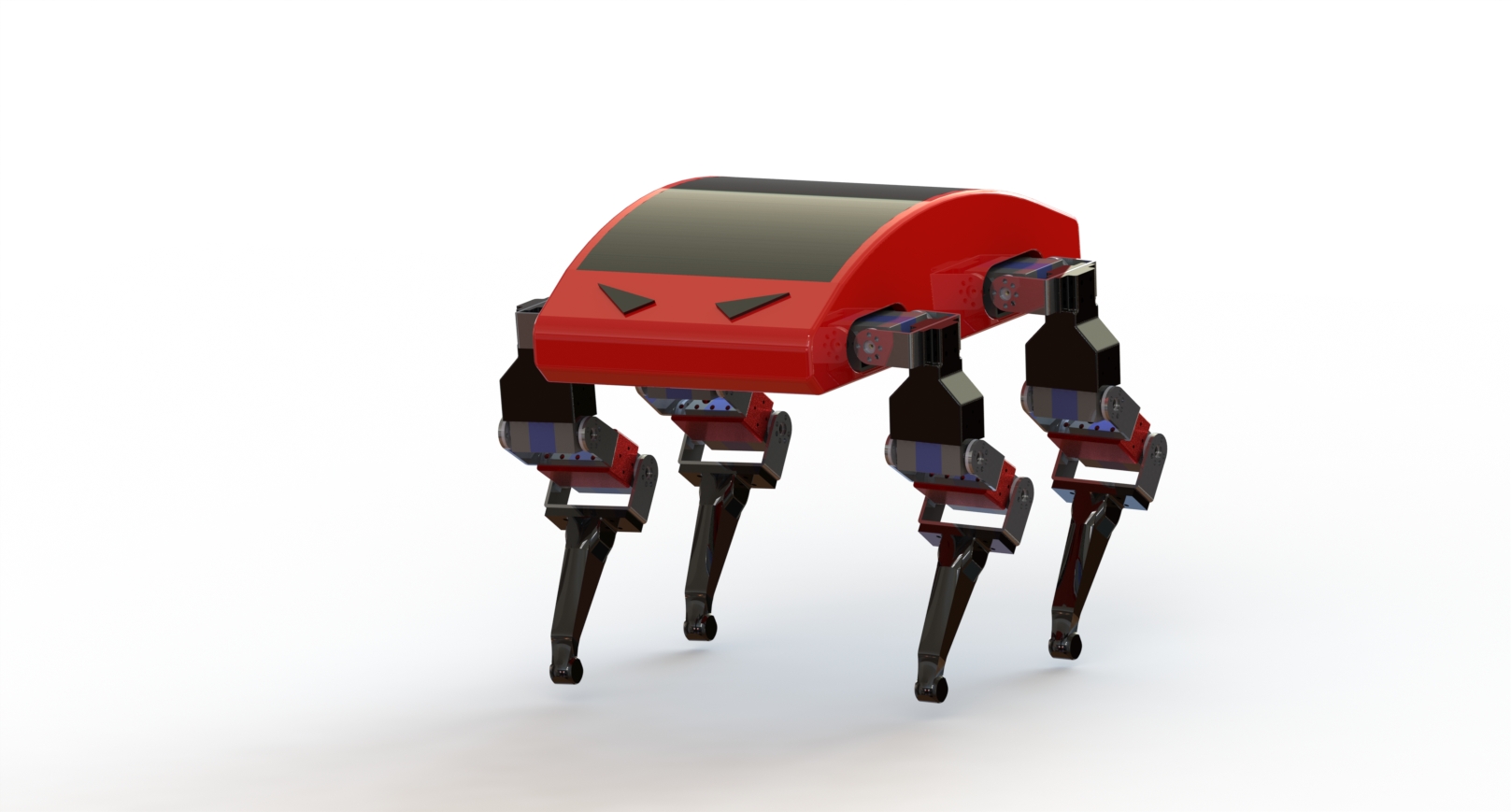

Quadruped

Four-Legged Robot

In this project, I led the design and development of a quadruped robot, which involved complex mechanical, electrical, and software integration. The goal was to create a robust, four-legged autonomous robot capable of navigating dynamic environments. Using CAD tools like SolidWorks and PTC Creo, I designed the robot’s structure, focusing on weight distribution and mechanical strength. The control system relied on ROS and a custom motion planning algorithm that allowed the robot to adjust its gait in real time based on the environment. I implemented dynamic path planning to ensure obstacle avoidance and smooth navigation. This project won a state-level robotics championship and significantly advanced my understanding of autonomous navigation and robotic kinematics.
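
As a rough illustration of what switching gaits can look like in software, here is a minimal sketch of a phase-based gait scheduler. The gait table, leg names, and `legs_in_stance` function are hypothetical and are not taken from the robot's actual motion planner; they only show how per-leg phase offsets and a duty factor decide which legs are on the ground at a given time.

```python
# Hypothetical gait table: per-leg phase offsets (fraction of a cycle) and
# duty factor (fraction of the cycle a leg spends in stance).
GAITS = {
    "walk": {"offsets": {"FL": 0.0, "RR": 0.25, "FR": 0.5, "RL": 0.75}, "duty": 0.75},
    "trot": {"offsets": {"FL": 0.0, "RR": 0.0, "FR": 0.5, "RL": 0.5}, "duty": 0.5},
}


def legs_in_stance(gait, t, period):
    """Return the legs that are in stance at time t for the chosen gait."""
    cfg = GAITS[gait]
    stance = []
    for leg, offset in cfg["offsets"].items():
        phase = (t / period + offset) % 1.0  # position of this leg in its cycle
        if phase < cfg["duty"]:
            stance.append(leg)
    return stance


# In a trot, diagonal leg pairs alternate between stance and swing.
print(legs_in_stance("trot", t=0.0, period=1.0))
print(legs_in_stance("trot", t=0.5, period=1.0))
```

A planner could change the `gait` argument when the terrain changes, for example falling back from a trot to the slower walk, which keeps three legs on the ground.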

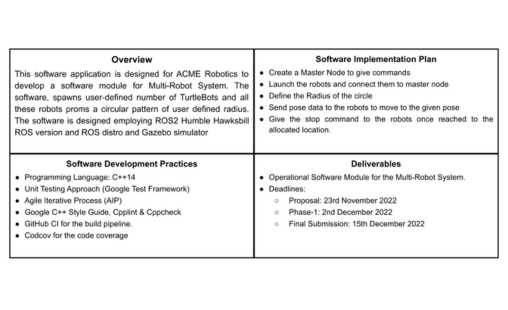

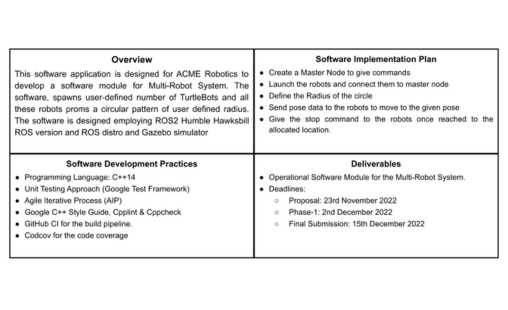

Multi-Robot System Software Module

In this project, I developed a software module for a multi-robot system, focusing on coordination and communication between robots. The system was designed to optimize task execution by distributing tasks among multiple robots, ensuring efficient task completion with minimal redundancy. I used ROS to manage inter-robot communication and integrated sensors for real-time data exchange, enabling the robots to adjust their paths based on the actions of other robots in the system. This project demonstrated my ability to build complex systems that require real-time collaboration between autonomous robots.
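
One simple way to distribute tasks so that each task is handled exactly once is a greedy nearest-robot assignment. The sketch below is an assumption-laden illustration, not the module's actual allocation logic: the `allocate_tasks` function, the 2D positions, and the distance-only cost are all hypothetical.

```python
from math import hypot


def allocate_tasks(robots, tasks):
    """Greedily give each task to the robot currently closest to it.

    robots and tasks map names to (x, y) positions. Each task is assigned
    once, and a robot's position moves to its latest task location.
    """
    positions = dict(robots)
    remaining = dict(tasks)
    plan = {name: [] for name in robots}
    while remaining:
        # Pick the cheapest (robot, task) pair among all remaining options.
        robot, task = min(
            ((r, t) for r in positions for t in remaining),
            key=lambda rt: hypot(
                positions[rt[0]][0] - remaining[rt[1]][0],
                positions[rt[0]][1] - remaining[rt[1]][1],
            ),
        )
        plan[robot].append(task)
        positions[robot] = remaining.pop(task)
    return plan


robots = {"r1": (0.0, 0.0), "r2": (10.0, 0.0)}
tasks = {"a": (1.0, 0.0), "b": (9.0, 0.0), "c": (2.0, 0.0)}
plan = allocate_tasks(robots, tasks)
print(plan)
```

A greedy rule like this is easy to reason about but does not balance workload; an optimal assignment would need something like the Hungarian algorithm or an auction scheme.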

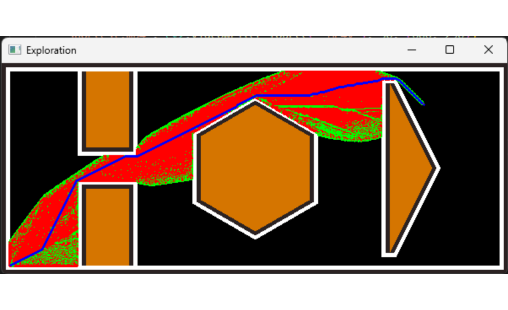

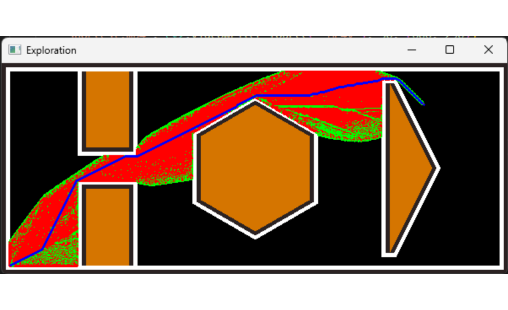

Dynamic Path Planning and Replanning Using RRT* for Autonomous Robots

This project involved the development of dynamic path-planning algorithms for an autonomous robot to navigate unpredictable environments. Using ROS and Gazebo for simulation, I implemented global path planning using Dijkstra and A* algorithms, while employing local planners for real-time obstacle avoidance. The robot successfully navigated a test environment while dynamically adjusting its path based on sensor input. This project not only refined my understanding of planning algorithms but also helped me master ROS and simulation tools like RViz and Gazebo.
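
To make the global planning step concrete, here is a minimal A* sketch on a 4-connected occupancy grid. It is a standalone illustration rather than the ROS planner used in the project; the grid encoding (0 free, 1 blocked), unit step cost, and Manhattan heuristic are assumptions chosen for brevity.

```python
import heapq


def astar(grid, start, goal):
    """A* over a grid of 0 (free) and 1 (blocked); returns a cell list or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance is admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    g_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
```

Setting the heuristic to zero turns the same loop into Dijkstra's algorithm. A simple form of replanning is to mark newly sensed obstacle cells as 1 and call `astar` again from the robot's current cell.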

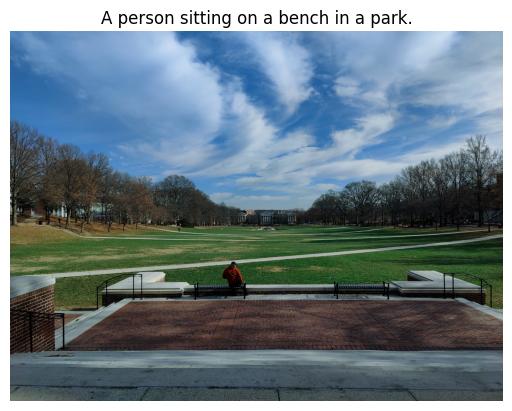

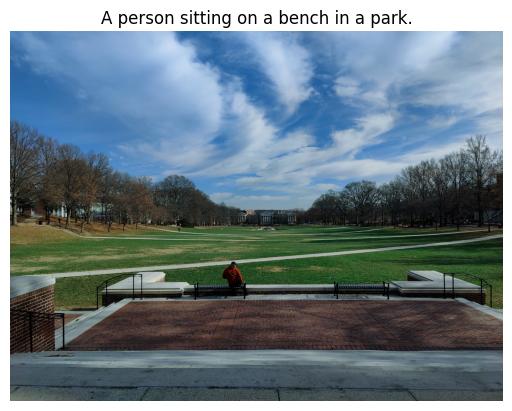

AI-Driven Real-Time Image Captioning for Enhanced Accessibility

This project aimed to assist visually impaired individuals by developing an AI-driven image captioning system. I trained a deep learning model using the Inception V3 CNN encoder for feature extraction, reaching a 92% accuracy rate in generating descriptive captions from real-time video feeds. To optimize real-time performance, I implemented Block Static Expansion and multi-headed attention vectors, which improved both accuracy and response time. Additionally, I developed Python scripts for seamless integration with mobile devices, enabling visually impaired users to capture and process video in real time, with automatic caption generation and voice narration. This project highlighted my ability to apply AI solutions to real-world accessibility challenges.

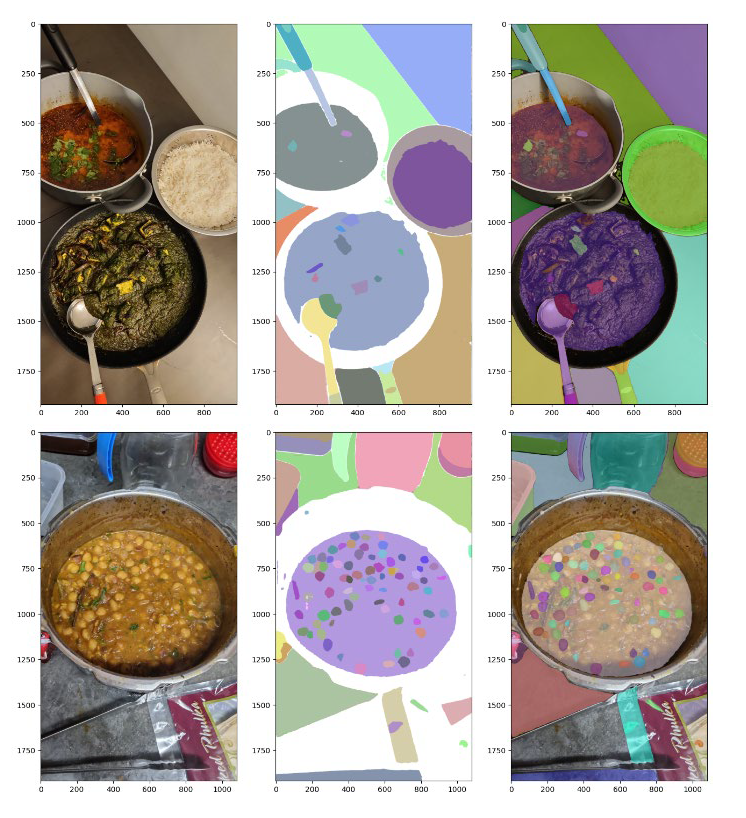

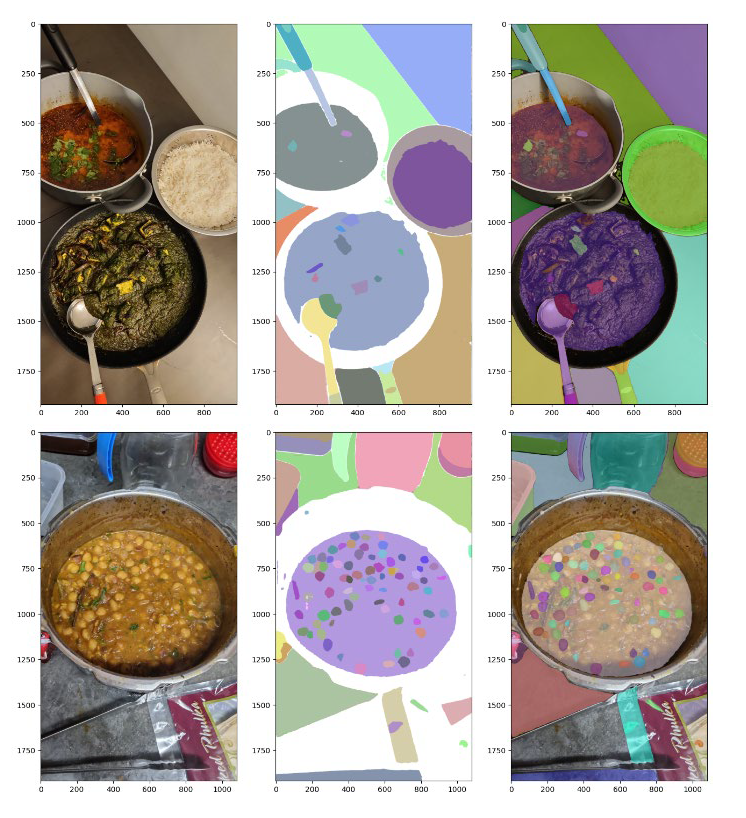

Semantic Segmentation for Real-Time Food Item Recognition

This project revolved around real-time food item segmentation using deep learning techniques. I employed CNN-based ResNet50 and PointRend architectures to achieve an 80% accuracy rate in food item segmentation from live video feeds. Using transfer learning, I was able to improve model performance, reaching 67% test accuracy on a limited dataset of 4,935 training images and 2,135 test images, outperforming a custom model’s 43% accuracy. Fine-tuning pre-trained architectures allowed me to develop a reliable and efficient segmentation system, which was aimed at applications in food identification and inventory management in commercial kitchens.
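
The text does not say which accuracy metric the figures above refer to. As a reference point, the sketch below computes two common segmentation metrics, pixel accuracy and per-class intersection over union (IoU), on small label masks. The function names and the toy masks are illustrative only.

```python
def pixel_accuracy(pred, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    total = correct = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            total += 1
            correct += p == t
    return correct / total


def class_iou(pred, truth, cls):
    """Intersection over union for one class label."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += p == cls and t == cls
            union += p == cls or t == cls
    return inter / union if union else float("nan")


# Toy 2x3 masks: label 0 is background, label 1 is a food item.
truth = [[0, 0, 1], [0, 1, 1]]
pred = [[0, 1, 1], [0, 1, 0]]
print(pixel_accuracy(pred, truth), class_iou(pred, truth, 1))
```

IoU is usually the stricter of the two, because large background regions can inflate pixel accuracy.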

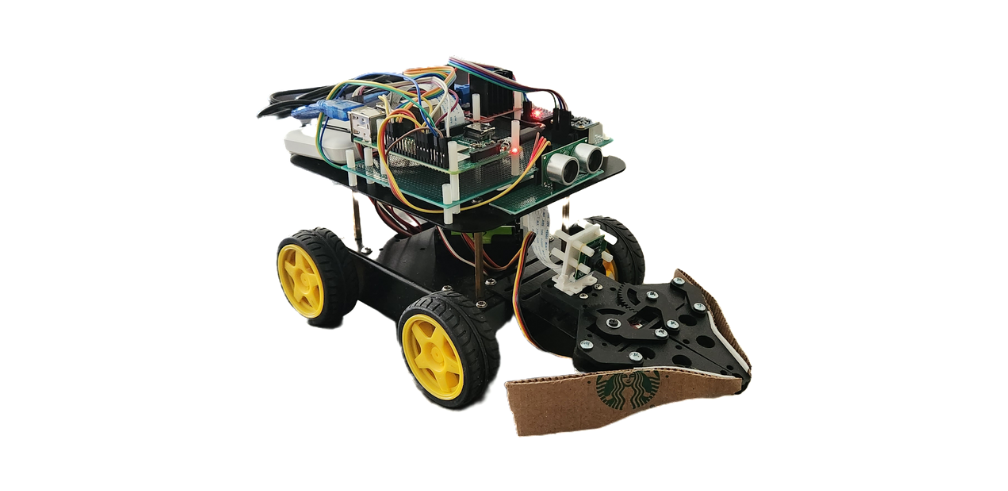

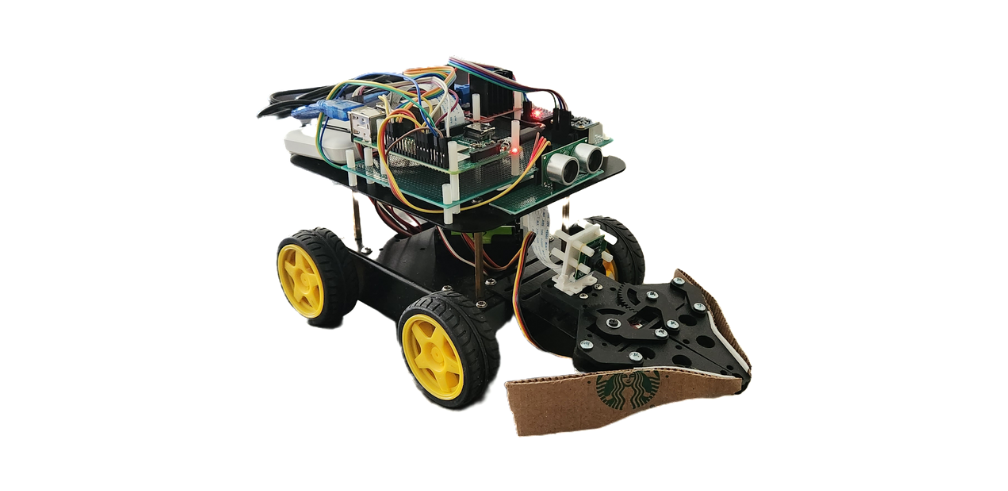

Autonomous Pick and Place Robot

This project involved the design and development of an autonomous robot capable of picking and placing color-coded blocks in a designated area. I engineered a real-time color detection system using a Pi Camera, which was integrated with the A* path planning algorithm to ensure accurate retrieval and placement of the blocks. The robot was equipped with IMU sensors (MPU6050) and encoders for precise navigation, and I implemented PID control for stable maneuvering. Obstacle avoidance was achieved through the use of ultrasonic sensors, allowing the robot to navigate dynamically changing environments. The project achieved an 80% success rate in goal placement, demonstrating effective robot localization and task execution.
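
The PID control mentioned above can be sketched as a small controller class driving a simulated heading. The gains, the output limit, and the idealized plant (heading changes at exactly the commanded turn rate) are assumptions for illustration and are not the values tuned on the robot.

```python
class PID:
    """Textbook PID controller with output clamping."""

    def __init__(self, kp, ki, kd, limit):
        self.kp, self.ki, self.kd, self.limit = kp, ki, kd, limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement, dt):
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.limit, min(self.limit, output))


def simulate_heading(target_deg, steps=500, dt=0.02):
    """Turn an idealized robot toward target_deg; returns the final heading."""
    pid = PID(kp=2.0, ki=0.0, kd=0.1, limit=90.0)  # hypothetical gains
    heading = 0.0  # degrees, as an IMU yaw estimate would report
    for _ in range(steps):
        turn_rate = pid.update(target_deg, heading, dt)  # degrees per second
        heading += turn_rate * dt
    return heading


print(round(simulate_heading(90.0), 2))
```

On real hardware the measurement would come from the IMU and encoders, and a nonzero integral gain would typically need anti-windup handling while the output is saturated.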

Miniature Statue Manufacturing

In this project, I led a team to scan and 3D print miniature replicas of an 11 ft statue to celebrate the 75th anniversary of my university. We used a 3D scanner to capture the intricate details of the original statue, and I led the scanning process to ensure accuracy and completeness of the data. Using Fusion 360, I performed reverse engineering to create a CAD model from the scanned data. I then used 3D printing technology to produce prototypes with different printing parameters, optimizing for quality, speed, and material usage. The final models were produced and distributed to over 1,000 alumni attendees.

Human Tracking Software Module with Real-Time Object Detection

This project focused on building a human tracking system using advanced object detection algorithms in C++. I integrated the YOLOv3 model for real-time detection, which achieved an 84% confidence level and 99 ms inference time on the MS-COCO dataset. I also incorporated a KCF tracker for precise tracking across 164,000 images, enabling reliable performance in dynamic environments. This project demonstrated significant improvement in accuracy and efficiency. To further enhance development, I integrated CI/CD pipelines with Travis CI and Coveralls, which boosted code quality and testing coverage. The module was used in a multi-robot system, where the detection and tracking data fed into autonomous path-planning algorithms for mobile robots.

Perception Project with TurtleBot 3 WafflePi

In this perception-focused project, I worked with the TurtleBot 3 WafflePi to autonomously navigate through a set of waypoints on a flat surface. My key contribution was calibrating the monocular camera using a checkerboard pattern to derive intrinsic and extrinsic parameters. From there, I calculated the homography of the floor, identified the vanishing point, and determined the horizon line, enabling the robot to detect and follow waypoints seamlessly. Additionally, I integrated YOLO-based stop sign detection, allowing the robot to recognize and respond to stop signs in varying orientations. This project sharpened my skills in perception, camera calibration, and robot path planning.
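
The link between the floor homography and the horizon line can be shown in a few lines. If H maps floor coordinates (X, Y, 1) to image pixels, its first two columns are the vanishing points of the floor's X and Y directions, and the horizon is the line through them. The numbers below are made up: a level camera with focal length 500 px, principal point (320, 240), mounted 0.2 m above the floor.

```python
def cross(a, b):
    """Cross product of two 3-vectors (homogeneous points or lines)."""
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]


def horizon_from_homography(H):
    """Return the horizon line (a, b, c), with a*u + b*v + c = 0 in pixels."""
    v1 = [H[0][0], H[1][0], H[2][0]]  # vanishing point of the floor X axis
    v2 = [H[0][1], H[1][1], H[2][1]]  # vanishing point of the floor Y axis
    return cross(v1, v2)


# Hypothetical floor-to-image homography H = K [r1 r2 t] for a level camera.
H = [
    [500.0, 320.0, 0.0],
    [0.0, 240.0, 100.0],
    [0.0, 1.0, 0.0],
]
a, b, c = horizon_from_homography(H)
print("horizon row (pixels):", -c / b)
```

For this level camera the horizon falls on the principal point's row, which is a quick sanity check on a calibration result.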

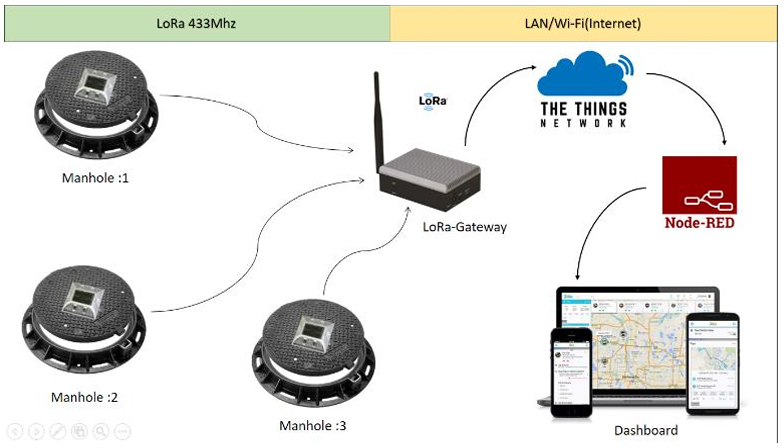

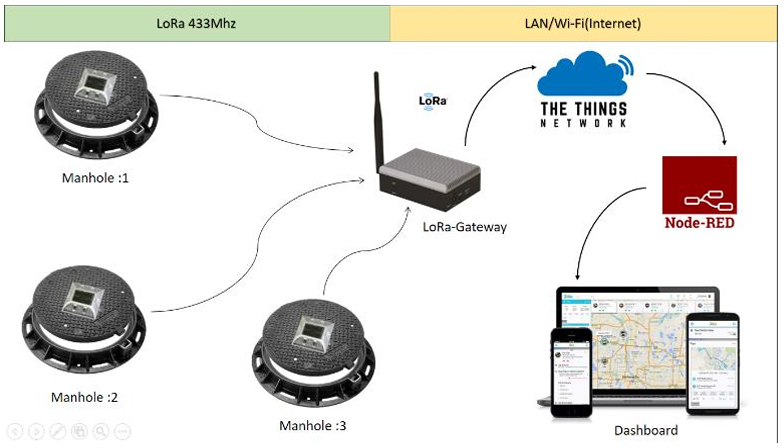

IoT-Based Wastewater Spillage Detection System

I co-invented a patented IoT-based wastewater spillage detection system designed to monitor and alert authorities of any spillage in real time. The system integrates multiple sensors to detect abnormal spillage patterns, and the data is transmitted via IoT devices to a centralized system for monitoring and action. This project combined sensor networks, data transmission protocols, and environmental monitoring technologies to create a solution that can have a significant impact on environmental safety.

RESTful API Server for Robot Management System

I developed a RESTful API server to manage a collection of OpenRAVE robots using Debian, Docker, and Python. This project aimed to provide a scalable and efficient solution for handling multiple robot configurations stored in JSON format. I utilized Python's Flask framework to implement CRUD operations, allowing for seamless robot data management through endpoints for adding, updating, and deleting robot specifications. Additionally, I containerized the API server using Docker to ensure consistent deployment and compatibility across different environments. The server could efficiently parse JSON requests, validate robot parameters, and provide real-time data response, thereby improving the robotic fleet management workflow. This project deepened my understanding of REST API architecture, Docker containerization, and robotic data handling.
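
The parse, validate, and respond flow described above can be sketched without any web framework. The `RobotStore` class, the required fields (`name`, `dof`), and the status codes below are hypothetical stand-ins for the real schema and endpoints; in a Flask app, each method would sit behind a route handler.

```python
import json

# Hypothetical schema: field name -> required Python type.
REQUIRED_FIELDS = {"name": str, "dof": int}


def parse_robot(raw):
    """Parse a JSON string and return the spec dict, or None if invalid."""
    try:
        spec = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(spec, dict):
        return None
    for field, ftype in REQUIRED_FIELDS.items():
        if not isinstance(spec.get(field), ftype):
            return None
    return spec


class RobotStore:
    """In-memory CRUD store that returns (HTTP-style status, body) pairs."""

    def __init__(self):
        self._robots = {}

    def create(self, raw):
        spec = parse_robot(raw)
        if spec is None:
            return 400, {"error": "invalid robot specification"}
        if spec["name"] in self._robots:
            return 409, {"error": "robot already exists"}
        self._robots[spec["name"]] = spec
        return 201, spec

    def read(self, name):
        if name not in self._robots:
            return 404, {"error": "not found"}
        return 200, self._robots[name]

    def update(self, name, raw):
        spec = parse_robot(raw)
        if spec is None:
            return 400, {"error": "invalid robot specification"}
        if name not in self._robots:
            return 404, {"error": "not found"}
        self._robots[name] = spec
        return 200, spec

    def delete(self, name):
        if self._robots.pop(name, None) is None:
            return 404, {"error": "not found"}
        return 204, {}


store = RobotStore()
print(store.create('{"name": "arm1", "dof": 6}'))
print(store.read("arm1"))
```

Keeping validation in a plain function like `parse_robot` makes it easy to unit test separately from the HTTP layer.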

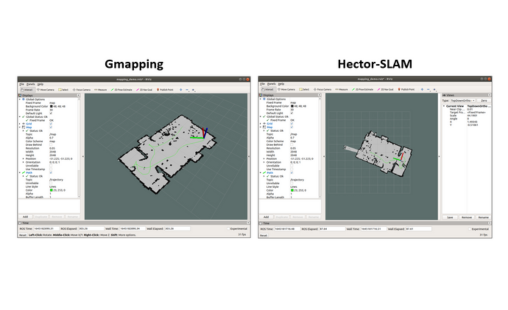

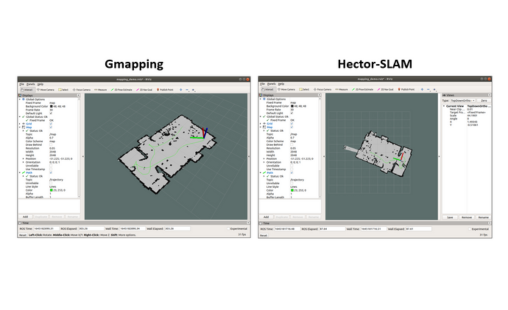

Comparison of 2D Mapping Algorithms

I conducted a comprehensive evaluation of 2D mapping algorithms, including HectorSLAM, Cartographer, and Gmapping. To validate the study, I mapped a 20,000 sq ft hostel floor, providing actionable insights for optimal mapping algorithm selection.

This project involved setting up a robotic control system for a collaborative robot (FRCobot) using ROS2, leveraging its real-time communication capabilities with DDS (Data Distribution Service) for reliable control. I created a ROS2 workspace and developed custom Python nodes for joint control, sensor processing, and safety features. Using URDF models, I defined the robot's kinematics and visualized it in RViz2, ensuring accurate transformations. I also configured MoveIt2 for motion planning, enabling pick-and-place and obstacle avoidance tasks, and integrated Gazebo for simulation to validate control algorithms before hardware deployment.

Autonomous Rover for Indoor and Outdoor Navigation

I designed and developed an autonomous rover capable of navigating both indoor and outdoor environments using SLAM-based and GPS-based navigation, respectively. The rover's indoor navigation was achieved using the Gmapping SLAM algorithm, which combined LIDAR and wheel odometry data for precise localization and mapping of the environment. For outdoor navigation, I integrated a GPS module that allowed the rover to accurately navigate through predefined waypoints in open areas. The project also involved developing a ROS-based control system to seamlessly switch between SLAM and GPS modes, ensuring continuous navigation without manual intervention. I implemented an Extended Kalman Filter (EKF) to fuse sensor data, achieving high accuracy in localization and reducing drift over time. Despite constraints on funding and a tight timeline, I sourced aluminum materials and fabricated custom mounts to house the sensors and electronic components, maintaining a balance between durability and weight. The final prototype demonstrated robust autonomous navigation in a mixed environment, showcasing my proficiency in integrating SLAM, GPS, and ROS, as well as expertise in sensor fusion techniques for practical robotic applications.
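
The predict and update cycle behind the sensor fusion can be illustrated with a scalar Kalman filter, which is the linear special case of the EKF. This is a deliberately simplified sketch, not the rover's filter: the state is a single position along a line, odometry supplies the motion increment, and a GPS-like sensor supplies the absolute measurement. All noise values are made up.

```python
def kf_predict(x, p, u, q):
    """Propagate the estimate by odometry increment u with process noise q."""
    return x + u, p + q


def kf_update(x, p, z, r):
    """Correct the estimate with measurement z of variance r."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


# One fusion cycle: start at 0 m with variance 1, drive about 1 m, read GPS.
x, p = 0.0, 1.0
x, p = kf_predict(x, p, u=1.0, q=0.1)
x, p = kf_update(x, p, z=1.2, r=0.5)
print(x, p)
```

A full EKF replaces the scalars with state vectors and covariance matrices and linearizes the nonlinear motion and measurement models with Jacobians at each step, but the same two-phase structure applies.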